Inbound Damages Process Modernization

Role: UX & AI Research Lead | Client: Global Logistics Network

Brief:

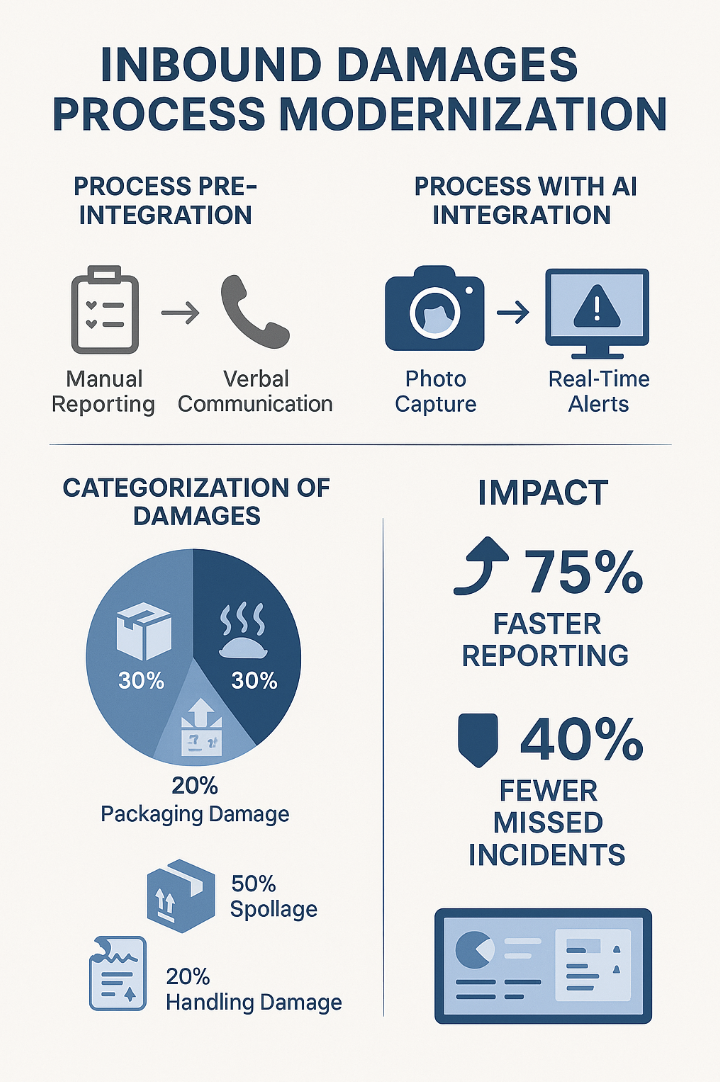

Developed and deployed an AI-powered damage detection and reporting workflow for inbound shipments, replacing manual, paper-based reporting that was often delayed. The system uses computer vision and anomaly detection algorithms to identify, log, and escalate potential damage in real time, improving operational speed, accuracy, and compliance.

Product Framing & Success Criteria

Product Goal: Reduce inbound damage reporting latency and improve classification accuracy by replacing manual reporting with a real-time, AI-powered detection and escalation workflow.

Primary Users: Dock workers capturing inbound shipments, supervisors reviewing incidents, and quality assurance managers responsible for SLA compliance.

Business Constraints: High-volume inbound flow, strict customer SLAs, data quality and labeling accuracy requirements, regulatory and contractual audit needs, and limited tolerance for false positives.

Success Metrics: Reporting time per incident, classification accuracy, SLA compliance rate, auditability of AI decisions, and user adoption across sites.

Key Objectives

Reduce reporting latency and human error in damage documentation.

Leverage computer vision to automate damage recognition and severity classification.

Ensure compliance with internal quality assurance standards and customer SLAs.

Integrate governance protocols for data labeling accuracy, auditability, and bias mitigation.

Process & Responsibilities

Conducted multi-site workflow analysis to document current-state damage reporting methods.

Partnered with data scientists to train and validate AI damage detection models using historical image datasets.

Developed a role-based digital reporting tool that integrates with the AI model for real-time damage alerts.

Applied AI governance practices by implementing confidence scoring thresholds, bias reviews, and audit trails for all model decisions.

Led usability testing to optimize the dashboard interface for dock workers, supervisors, and quality managers.

Facilitated change management and training for operational staff.
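The governance practices listed above (confidence-score thresholds, human confirmation, and an audit trail for every model decision) can be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not the deployed system: the `Detection` fields, the three-level severity scale, the threshold values, the model version string, and the `route` function are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical threshold values; in practice these would be set from validation data.
ESCALATE_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.50


@dataclass
class Detection:
    shipment_id: str
    damage_type: str
    severity: str      # hypothetical scale: "minor" | "major" | "critical"
    confidence: float  # model confidence in [0, 1]


def route(detection: Detection, audit_log: list, model_version: str = "model-v0") -> str:
    """Route one model detection and append an audit record for the decision.

    Every path ends with a human in the loop or a logged record; the model never
    closes an incident on its own. `model_version` is a hypothetical placeholder.
    """
    if detection.confidence >= ESCALATE_THRESHOLD and detection.severity == "critical":
        decision = "escalate"      # alert a supervisor immediately; a human still confirms
    elif detection.confidence >= REVIEW_THRESHOLD:
        decision = "human_review"  # queue for dock-worker or supervisor confirmation
    else:
        decision = "log_only"      # not flagged, but kept for audit and retraining
    audit_log.append({
        **asdict(detection),
        "decision": decision,
        "model_version": model_version,
        "thresholds": {"escalate": ESCALATE_THRESHOLD, "review": REVIEW_THRESHOLD},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    })
    return decision


log: list = []
print(route(Detection("SHP-001", "crushed_corner", "critical", 0.95), log))  # escalate
print(route(Detection("SHP-002", "torn_wrap", "minor", 0.72), log))          # human_review
print(route(Detection("SHP-003", "scuff", "minor", 0.20), log))              # log_only
print(json.dumps(log[0], indent=2))
```

Recording the thresholds and model version alongside each decision is what makes an individual flag reviewable after the fact, even if thresholds are later retuned.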

Key Product Decisions & Tradeoffs

Automation vs. Human Verification: Used AI for initial damage detection and severity scoring while retaining human review for final confirmation to balance speed, accuracy, and accountability.

Sensitivity vs. False Positives: Tuned confidence thresholds to reduce over-flagging while ensuring critical damage was escalated promptly.

Explainability vs. Model Complexity: Prioritized interpretable model outputs and confidence scoring over more complex architectures to support auditability and stakeholder trust.

Scalability vs. Data Quality Control: Standardized labeling and governance processes to support scale across sites while maintaining model performance and bias mitigation.
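One common way to make the sensitivity vs. false-positive tradeoff concrete is to sweep candidate thresholds over a labeled validation set and keep the highest threshold that still meets a recall target for damaged items; because raising the threshold can only reduce (or hold) the number of false positives, that threshold flags the fewest undamaged items among those that meet the target. The sketch below assumes that approach. The `pick_threshold` helper, the recall targets, and the sample scores are hypothetical and are not drawn from the project data.

```python
from typing import List, Optional, Tuple


def pick_threshold(
    scored: List[Tuple[float, bool]], min_recall: float
) -> Optional[Tuple[float, float, int]]:
    """Return (threshold, recall, false_positives) for the highest candidate
    threshold whose recall on truly damaged items is at least `min_recall`.

    `scored` holds (model_confidence, is_actually_damaged) pairs from a
    hypothetical labeled validation set. Returns None if there are no positives.
    """
    positives = sum(1 for _, is_damaged in scored if is_damaged)
    if positives == 0:
        return None
    # Candidate thresholds are the distinct scores, checked from highest to lowest,
    # so the first one that meets the recall target has the fewest false positives.
    for t in sorted({s for s, _ in scored}, reverse=True):
        flagged = [is_damaged for s, is_damaged in scored if s >= t]
        tp = sum(flagged)
        fp = len(flagged) - tp
        recall = tp / positives
        if recall >= min_recall:
            return t, recall, fp
    return None


# Illustrative validation scores only (not project data).
validation = [
    (0.97, True), (0.91, True), (0.88, False), (0.80, True),
    (0.65, False), (0.55, False), (0.40, True), (0.30, False),
]
print(pick_threshold(validation, min_recall=0.75))
print(pick_threshold(validation, min_recall=1.0))
```

With this illustrative data, a 0.75 recall target selects a threshold of 0.80 with one false positive, while demanding full recall drops the threshold to 0.40 and admits three false positives, which is the over-flagging cost the tradeoff weighs.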

Outcome

Reduced reporting time from hours to under 2 minutes per incident.

Increased accuracy of initial damage classification by 88% compared to manual reports.

Delivered a governance-compliant AI pipeline with explainability features and audit logs.

Improved SLA compliance and customer trust through consistent, verifiable reporting.